tl;dr

I used the {rtweet} and {magick} R packages to fetch tweets of random satellite images of London and then reduced each one to a single representative colour.

Green/grey belt

I created the @londonmapbot Twitter bot to tweet out satellite images of random points in Greater London. You can read earlier posts about how it was made and how I mapped the points interactively.

I figured we could sample these to get to ‘the colours of London’, which can be mapped or tiled.

This is not too dissimilar to efforts to find the ‘average colour’ of countries of the world, which Erin wrote a nice post about, for example.1 The difference is that we aren’t finding a colour to represent London, we’re representing London with a series of single-colour points.

This is relatively trivial with the packages {rtweet} to pull tweets and {magick} to manipulate the images. We can use {sf} to place the points on a map and {ggplot2} for other visualisations.

Get bot log

First, load the packages we need. You’ll need to use install.packages() for each one if you haven’t already installed them.

library(rtweet)

library(magick)## Linking to ImageMagick 6.9.12.3

## Enabled features: cairo, fontconfig, freetype, heic, lcms, pango, raw, rsvg, webp

## Disabled features: fftw, ghostscript, x11library(tidyverse)## ── Attaching packages ─────────────────────────────────────── tidyverse 1.3.0 ──## ✓ ggplot2 3.3.3 ✓ purrr 0.3.4

## ✓ tibble 3.1.1 ✓ dplyr 1.0.6

## ✓ tidyr 1.1.3 ✓ stringr 1.4.0

## ✓ readr 1.4.0 ✓ forcats 0.5.1## ── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──

## x dplyr::filter() masks stats::filter()

## x purrr::flatten() masks rtweet::flatten()

## x dplyr::lag() masks stats::lag()library(sf)## Linking to GEOS 3.8.1, GDAL 3.1.4, PROJ 6.3.1{rtweet} makes it very easy to collect tweet content. To the get_timeline() function you can pass an account name and the number of tweets you want to fetch. You’ll need to set up authenication first, of course.

tweets_read <- get_timeline("londonmapbot", n = 625)Why do I want 625? Well, the bot has tweeted out nearly 9000 images at time of writing, but I want a useable number for this post. (Spoiler: I also want to make a 25 by 25 grid of squares as one of my outputs.)

The function actually returns more than 625 because {rtweet} maximises the number of tweets it fetches for each API call. Better to return more than you asked for, rather than less.

The returned tibble contains a lot of information. I’m only interested in the media_url and text columns, from which I can extract the satellite image URLs and, with some regular expressions, the coordinate information that’s provided in the body of the tweet.

tweets <- tweets_read %>%

transmute(media_url = unlist(media_url), text) %>%

transmute(

media_url,

latitude = str_extract(text, "^\\d{2}.\\d{1,4}"),

longitude = str_extract(text, "(?<=, ).*(?=\nhttps)")

) %>%

slice(1:625)So we’ve got a tibble with 3 columns and 625 rows.

glimpse(tweets)## Rows: 625

## Columns: 3

## $ media_url <chr> "http://pbs.twimg.com/media/E7BOglJVgAEE3XL.jpg", "http://pb…

## $ latitude <chr> "51.5651", "51.4665", "51.3752", "51.5041", "51.5668", "51.3…

## $ longitude <chr> "0.0466", "-0.3526", "-0.1997", "-0.0174", "-0.1882", "-0.13…I’m going to iterate through each URL to download the associated image to a temporary directory. I’ve used a walk() function from {purrr} rather than map() because we aren’t returning anything; we’re saving a file to a folder.

Specifically, I used walk2(), which lets me supply two values to the iterate process: the URL and also the iteration number for that URL. That means I can print a message in the form ‘Fetching 1 of 625’ and get a rough idea of progress.

I’ve also added a Sys.sleep() call to slow the process, as not to hammer the Twitter API too hard.2

# Function: download images from URLs

download_images <- function(paths, dir) {

Sys.sleep(sample(0:2, 1)) # random pause

tw_df <- data.frame(number = 1:length(paths), url = paths)

purrr::walk2(

tw_df$number, tw_df$url,

~ { cat("Fetching", .x, "of", length(tw_df$number), "\n")

download.file(.y, file.path(dir, basename(.y))) }

)

}So, you can pass a vector of URLs and a directory path to the function. For purposes of this post, I’m going to save the files to a temporary folder.

That call takes a little while and the duration will vary given the random pauses built into the function. I’ve hidden the output because there would be 625 items printed to the console. An example of the output:

Fetching 479 of 625

trying URL 'http://pbs.twimg.com/media/E6Akw2fXMAA3VSk.jpg'

Content type 'image/jpeg' length 113537 bytes (110 KB)

==================================================

downloaded 110 KBTo prove this has worked, we can fetch all the image paths from the directory in which they’re stored and count how many there are.

files <- list.files(tmp, ".jpg$", full.names = TRUE)

length(files)## [1] 625Great, as expected. Now we have a set of satellite images that we can manipulate.

Demo: one image

As a demo, let’s take a look at the first image.

ex_in <- image_read(files[1])

ex_in

Now we can crop out the logos, reduce its colours and resize it using functions from the {magick} package.

‘Quantization’ is the process we’ll use on each image; it’s basically the algorithmic simplification of an image to the colours that best represent it. You could, for example, use this for reducing the number of colours in an image to make it easier to compress while minimising information loss. We’re going to quantize to just one colour to find the colour that best represents the image. Note that this isn’t the same as ‘taking an average colour’.

ex_square <- ex_in %>%

image_crop("x420-0") %>%

image_quantize(1) %>%

image_resize("100x100!")

ex_square

So the colour of that square is what you get when you quantize the original satellite image down to one colour. What is that colour? We can extract the hex code.

ex_rgb <- image_data(ex_square, channels = "rgb")[1:3]

ex_hex <- toupper(paste0("#", paste(as.character(ex_rgb), collapse = "")))

ex_hex## [1] "#48503E"Of course, we can generally expect that the colour will be somewhere between very green (city fringes, parks, golf courses) and very grey (urban), while some may be more blue (reservoirs).

All images

The image_*() functions in {magick} are generally vectorised, so we can pass it all of the paths to our files and apply the wrangling steps across all of the images at once.

imgs_in <- image_read(files)

imgs <- image_crop(imgs_in, "x420-0")I want to grab the single quantized hex value representing each image.

imgs_dat <- imgs %>% image_quantize(1) %>% image_resize("1x1!")

hex_dat <- map(1:625, ~image_data(imgs_dat, "rgb", frame = .x))

hex_cols <- hex_dat %>%

map_chr(~paste0("#", toupper(paste(.[1:3], collapse = ""))))

head(hex_cols)## [1] "#48503E" "#535C3F" "#435034" "#415534" "#5D6152" "#535F44"Now we can bind these to our tweets dataset.

tweets_cols <- tweets %>% bind_cols(hex = hex_cols)

glimpse(tweets_cols)## Rows: 625

## Columns: 4

## $ media_url <chr> "http://pbs.twimg.com/media/E7BOglJVgAEE3XL.jpg", "http://pb…

## $ latitude <chr> "51.5651", "51.4665", "51.3752", "51.5041", "51.5668", "51.3…

## $ longitude <chr> "0.0466", "-0.3526", "-0.1997", "-0.0174", "-0.1882", "-0.13…

## $ hex <chr> "#48503E", "#535C3F", "#435034", "#415534", "#5D6152", "#535…Visualisation: map

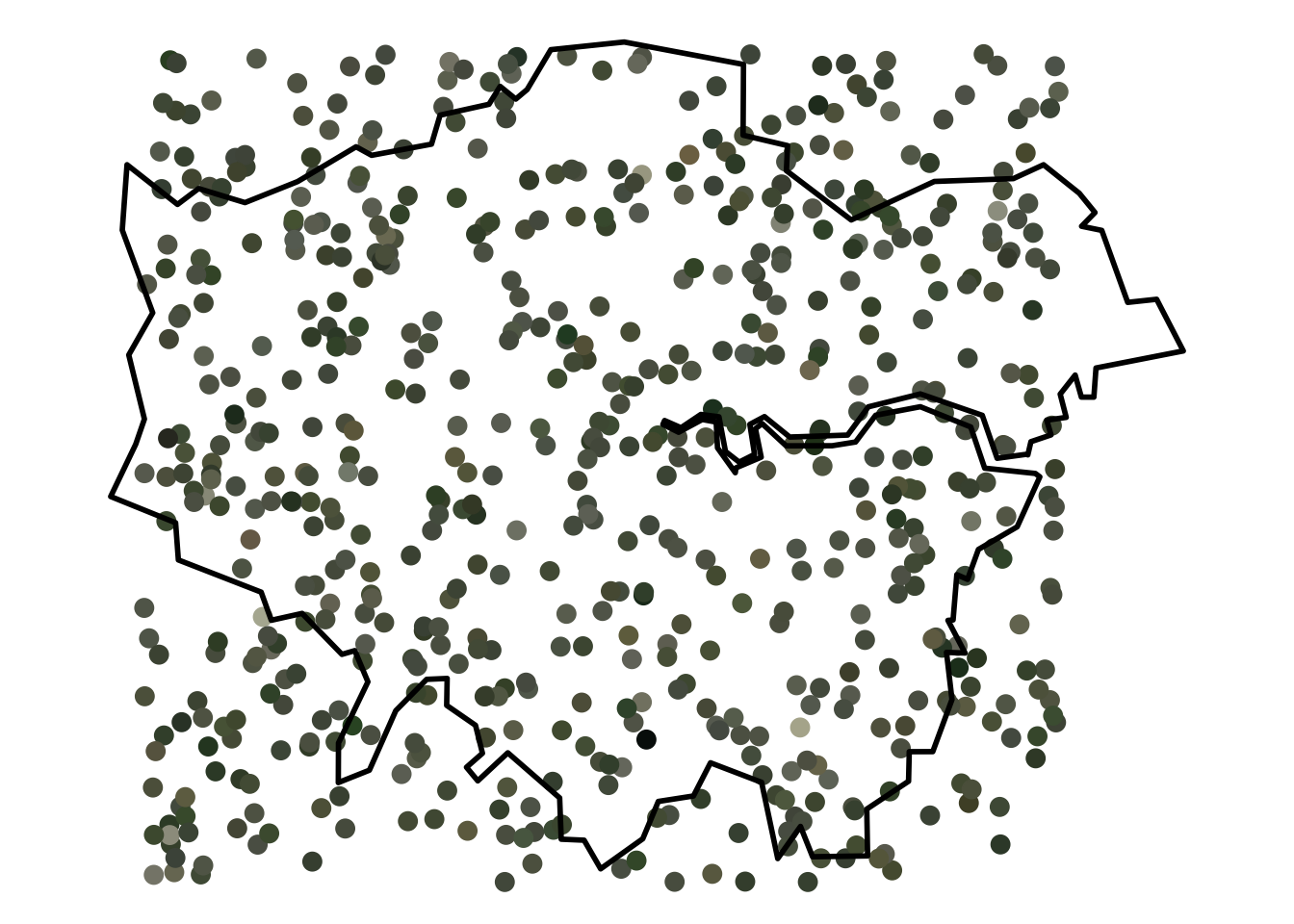

The obvious thing to do is to create a map with each point marking the location of a satellite image tweeted by londonmapbot, filled with the single representative colour for that image.

The bot samples from a square roughly covering Greater London within the M25, so it might be nice to show the outline of London for reference. The {sf} package makes it straightforward to read a GeoJSON of the NUTS1 boundaries for the UK via the Open Geography Portal API, then convert it to latitude-longitude coordinates and filter for London only.

nuts_path <- "https://opendata.arcgis.com/datasets/01fd6b2d7600446d8af768005992f76a_4.geojson"

ldn_sf <- st_read(nuts_path) %>%

st_transform(crs = 4326) %>%

filter(nuts118nm == "London")## Reading layer `NUTS_Level_1_(January_2018)_Boundaries' from data source `https://opendata.arcgis.com/datasets/01fd6b2d7600446d8af768005992f76a_4.geojson' using driver `GeoJSON'

## Simple feature collection with 12 features and 9 fields

## Geometry type: MULTIPOLYGON

## Dimension: XY

## Bounding box: xmin: -8.649996 ymin: 49.88234 xmax: 1.762942 ymax: 60.86078

## Geodetic CRS: WGS 84And we can convert our tweets tibble to an sf-class spatial object as well, given that it contains coordinate information.

tweets_sf <- tweets_cols %>%

st_as_sf(coords = c("longitude", "latitude"), crs = 4326)Then it’s a case of adding these to a map, which in this case is a {ggplot2} object. The geom_sf() function is great at accepting and understanding polygons and points.

ggplot() +

geom_sf(data = tweets_sf, col = hex_cols, size = 3) +

geom_sf(data = ldn_sf, alpha = 0, size = 1, col = "black") +

theme_void() Are there any patterns here? Maybe it’s greener in the suburbs? (It’s a serious question; I’m a deuteranope.)3

Are there any patterns here? Maybe it’s greener in the suburbs? (It’s a serious question; I’m a deuteranope.)3

Visualisation: tiles

Recently I’ve written some posts involving R and abstract art (like pixel art and a Shiny app to remix art by Sol LeWitt).

So why not get more abstract with these data points? We can create squares of each colour and tile them.

Here the tiles are laid out row by row from right to left, in a more-or-less random order.

hex_tiles <- crossing(x = 1:25, y = 1:25) %>%

bind_cols(hex = tweets_cols$hex)

ggplot() +

geom_tile(aes(hex_tiles$x, hex_tiles$y), fill = hex_tiles$hex) +

theme_void()

For fans of order, we could instead arrange them by brightness, or ‘luminance’.4 Here I’ve modified a simple approach by Wouter van der Bijl from a StackOverflow post.

# Get luminance for hex values

rgb_vals <- col2rgb(tweets_cols$hex) # Hex to RGB

lab_vals <- convertColor(t(rgb_vals), 'sRGB', 'Lab') # RGB to Lab

hex_lum <- tweets_cols$hex[order(lab_vals[, 'L'])] # luminance order

# Set up dummy xy tile locations

cross_xy <- crossing(y = 1:25, x = 1:25)

# Create tibble of x, y, hex luminance

hex_tiles_bright <- tibble(

x = cross_xy$x,

y = rev(cross_xy$y),

hex = hex_lum

)

# Plot so 'lightest' in top left, 'darkest' in bottom right

ggplot(hex_tiles_bright) +

geom_tile(aes(x, y), fill = rev(hex_tiles_bright$hex)) +

theme_void()

Stunning, eh? Kinda?

The colours make me think of the classic smoggy ‘pea souper’ of London in times past, which is fitting.

Of course, there’s many more data available in the londonmapbot feed and many other ways to visualise these data, so I may return to this idea in the future.

Session info

## ─ Session info ───────────────────────────────────────────────────────────────

## setting value

## version R version 4.0.3 (2020-10-10)

## os macOS Mojave 10.14.6

## system x86_64, darwin17.0

## ui X11

## language (EN)

## collate en_GB.UTF-8

## ctype en_GB.UTF-8

## tz Europe/London

## date 2021-07-24

##

## ─ Packages ───────────────────────────────────────────────────────────────────

## package * version date lib source

## assertthat 0.2.1 2019-03-21 [1] CRAN (R 4.0.2)

## backports 1.2.1 2020-12-09 [1] CRAN (R 4.0.2)

## blogdown 0.21 2020-10-11 [1] CRAN (R 4.0.2)

## bookdown 0.21 2020-10-13 [1] CRAN (R 4.0.2)

## broom 0.7.6 2021-04-05 [1] CRAN (R 4.0.2)

## bslib 0.2.4 2021-01-25 [1] CRAN (R 4.0.2)

## cellranger 1.1.0 2016-07-27 [1] CRAN (R 4.0.2)

## class 7.3-17 2020-04-26 [2] CRAN (R 4.0.3)

## classInt 0.4-3 2020-04-07 [1] CRAN (R 4.0.2)

## cli 2.5.0 2021-04-26 [1] CRAN (R 4.0.2)

## colorspace 2.0-1 2021-05-04 [1] CRAN (R 4.0.2)

## crayon 1.4.1 2021-02-08 [1] CRAN (R 4.0.2)

## DBI 1.1.1 2021-01-15 [1] CRAN (R 4.0.2)

## dbplyr 2.1.0 2021-02-03 [1] CRAN (R 4.0.2)

## digest 0.6.27 2020-10-24 [1] CRAN (R 4.0.2)

## dplyr * 1.0.6 2021-05-05 [1] CRAN (R 4.0.2)

## e1071 1.7-6 2021-03-18 [1] CRAN (R 4.0.2)

## ellipsis 0.3.2 2021-04-29 [1] CRAN (R 4.0.2)

## evaluate 0.14 2019-05-28 [1] CRAN (R 4.0.1)

## fansi 0.4.2 2021-01-15 [1] CRAN (R 4.0.2)

## farver 2.1.0 2021-02-28 [1] CRAN (R 4.0.2)

## forcats * 0.5.1 2021-01-27 [1] CRAN (R 4.0.2)

## fs 1.5.0 2020-07-31 [1] CRAN (R 4.0.2)

## generics 0.1.0 2020-10-31 [1] CRAN (R 4.0.2)

## ggplot2 * 3.3.3 2020-12-30 [1] CRAN (R 4.0.2)

## glue 1.4.2 2020-08-27 [1] CRAN (R 4.0.2)

## gtable 0.3.0 2019-03-25 [1] CRAN (R 4.0.2)

## haven 2.4.0 2021-04-14 [1] CRAN (R 4.0.2)

## highr 0.9 2021-04-16 [1] CRAN (R 4.0.2)

## hms 1.0.0 2021-01-13 [1] CRAN (R 4.0.2)

## htmltools 0.5.1.1 2021-01-22 [1] CRAN (R 4.0.2)

## httr 1.4.2 2020-07-20 [1] CRAN (R 4.0.2)

## jquerylib 0.1.3 2020-12-17 [1] CRAN (R 4.0.2)

## jsonlite 1.7.2 2020-12-09 [1] CRAN (R 4.0.2)

## KernSmooth 2.23-17 2020-04-26 [2] CRAN (R 4.0.3)

## knitr 1.32 2021-04-14 [1] CRAN (R 4.0.2)

## labeling 0.4.2 2020-10-20 [1] CRAN (R 4.0.2)

## lifecycle 1.0.0 2021-02-15 [1] CRAN (R 4.0.2)

## lubridate 1.7.10 2021-02-26 [1] CRAN (R 4.0.2)

## magick * 2.7.1 2021-03-20 [1] CRAN (R 4.0.2)

## magrittr 2.0.1 2020-11-17 [1] CRAN (R 4.0.2)

## modelr 0.1.8 2020-05-19 [1] CRAN (R 4.0.2)

## munsell 0.5.0 2018-06-12 [1] CRAN (R 4.0.2)

## pillar 1.6.0 2021-04-13 [1] CRAN (R 4.0.2)

## pkgconfig 2.0.3 2019-09-22 [1] CRAN (R 4.0.2)

## png 0.1-7 2013-12-03 [1] CRAN (R 4.0.2)

## proxy 0.4-25 2021-03-05 [1] CRAN (R 4.0.2)

## purrr * 0.3.4 2020-04-17 [1] CRAN (R 4.0.2)

## R6 2.5.0 2020-10-28 [1] CRAN (R 4.0.2)

## Rcpp 1.0.6 2021-01-15 [1] CRAN (R 4.0.2)

## readr * 1.4.0 2020-10-05 [1] CRAN (R 4.0.2)

## readxl 1.3.1 2019-03-13 [1] CRAN (R 4.0.2)

## reprex 1.0.0 2021-01-27 [1] CRAN (R 4.0.2)

## rlang 0.4.11 2021-04-30 [1] CRAN (R 4.0.2)

## rmarkdown 2.7 2021-02-19 [1] CRAN (R 4.0.2)

## rstudioapi 0.13 2020-11-12 [1] CRAN (R 4.0.2)

## rtweet * 0.7.0 2020-01-08 [1] CRAN (R 4.0.2)

## rvest 0.3.6 2020-07-25 [1] CRAN (R 4.0.2)

## sass 0.3.1 2021-01-24 [1] CRAN (R 4.0.2)

## scales 1.1.1 2020-05-11 [1] CRAN (R 4.0.2)

## sessioninfo 1.1.1 2018-11-05 [1] CRAN (R 4.0.2)

## sf * 0.9-8 2021-03-17 [1] CRAN (R 4.0.2)

## stringi 1.6.1 2021-05-10 [1] CRAN (R 4.0.2)

## stringr * 1.4.0 2019-02-10 [1] CRAN (R 4.0.2)

## tibble * 3.1.1 2021-04-18 [1] CRAN (R 4.0.2)

## tidyr * 1.1.3 2021-03-03 [1] CRAN (R 4.0.2)

## tidyselect 1.1.1 2021-04-30 [1] CRAN (R 4.0.2)

## tidyverse * 1.3.0 2019-11-21 [1] CRAN (R 4.0.2)

## units 0.7-1 2021-03-16 [1] CRAN (R 4.0.2)

## utf8 1.2.1 2021-03-12 [1] CRAN (R 4.0.2)

## vctrs 0.3.8 2021-04-29 [1] CRAN (R 4.0.2)

## withr 2.4.2 2021-04-18 [1] CRAN (R 4.0.2)

## xfun 0.22 2021-03-11 [1] CRAN (R 4.0.2)

## xml2 1.3.2 2020-04-23 [1] CRAN (R 4.0.2)

## yaml 2.2.1 2020-02-01 [1] CRAN (R 4.0.2)

##

## [1] /Users/matt.dray/Library/R/4.0/library

## [2] /Library/Frameworks/R.framework/Versions/4.0/Resources/libraryI didn’t find Erin’s post until after starting my post, but I see that there are similarities in tools: Erin makes use of many of the {magick} functions that I have, for example. This makes me think I’ve used a sensible approach.↩︎

I’m being relatively polite by doing this, it’s probably not strictly necessary.↩︎

As in the ‘nope’ to green/brown/red sort of colourblindness.↩︎

Colour is a hard concept and the idea of ‘brightness’ is no exception. We’re keeping things naïve here.↩︎